Fundamental issues are forcing crazy workarounds.

Problems with HTTP connections:

- Expensive to make (slow start and handshake, consider SSL)

- Essentially limited to one transaction at a time with high latency

- Even when pipelined suffers from head of line blocking nightmares

- Always originated at the client

- There are way too many of them

The most fundamental change of SPDY vs HTTP is that SPDY multiplexes as much as possible over a single busy connection instead of using lots of short lightly used connections.

Much of this talk will be about why we need to move beyond the way HTTP currently handles connections. SPDY is one way to do that.

- Enhancing policy (2, 4, 6, ..?)

- Subverting policy through sharding

- Speculative pre connections

- Long lived hanging-get, comet, etc. connections

And also by artificially reducing transaction count:

- CSS sprites

- Inlining js and css

- data: URLs

But it is crude, hand driven, cache-unfriendly, and does not decompose well.

- The New York Times does it - 83 connections are used loading the home page

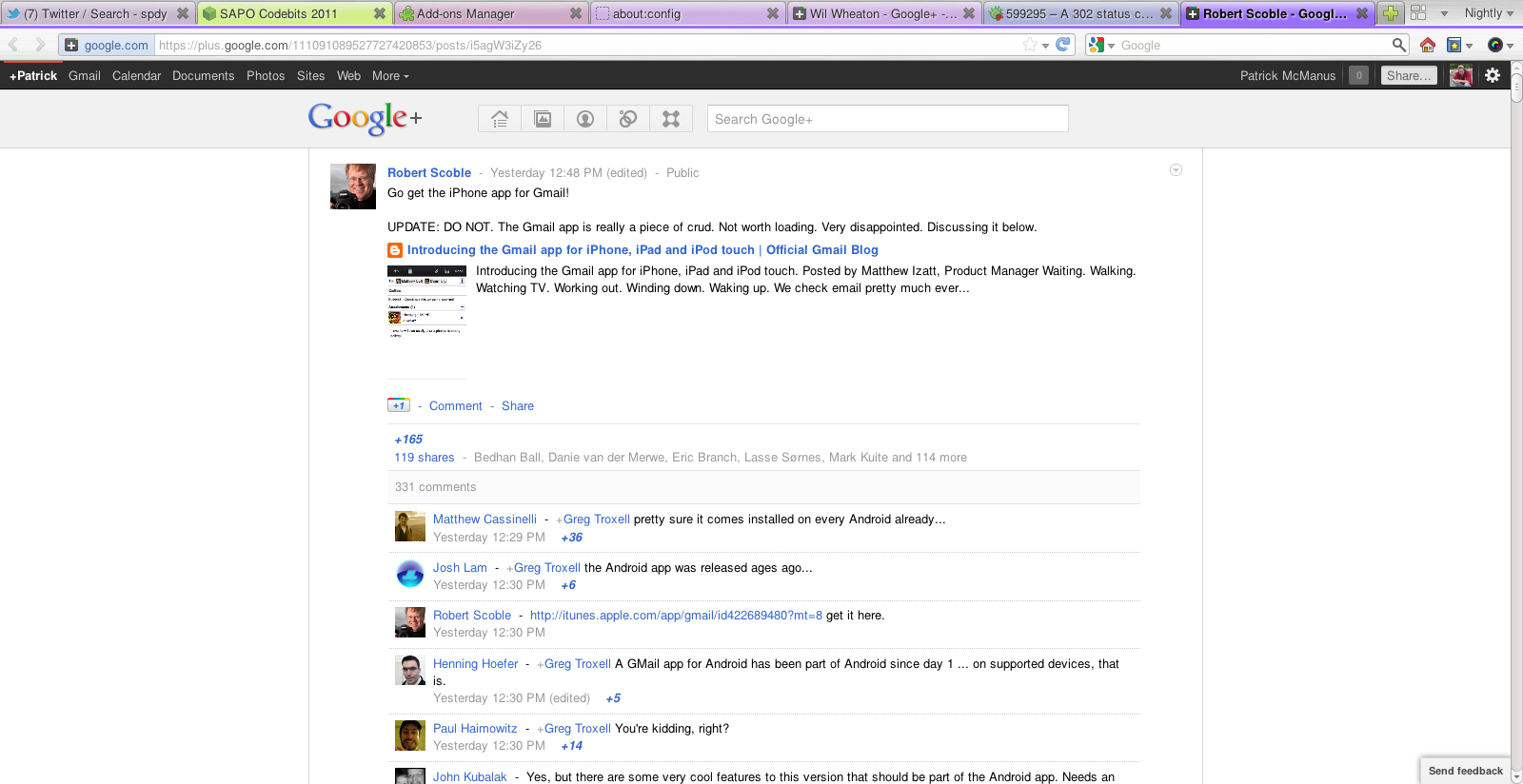

- Google Plus does it - 64 connections are commonly used to load a post there

- Facebook too - 75 connections to view a photo gallery

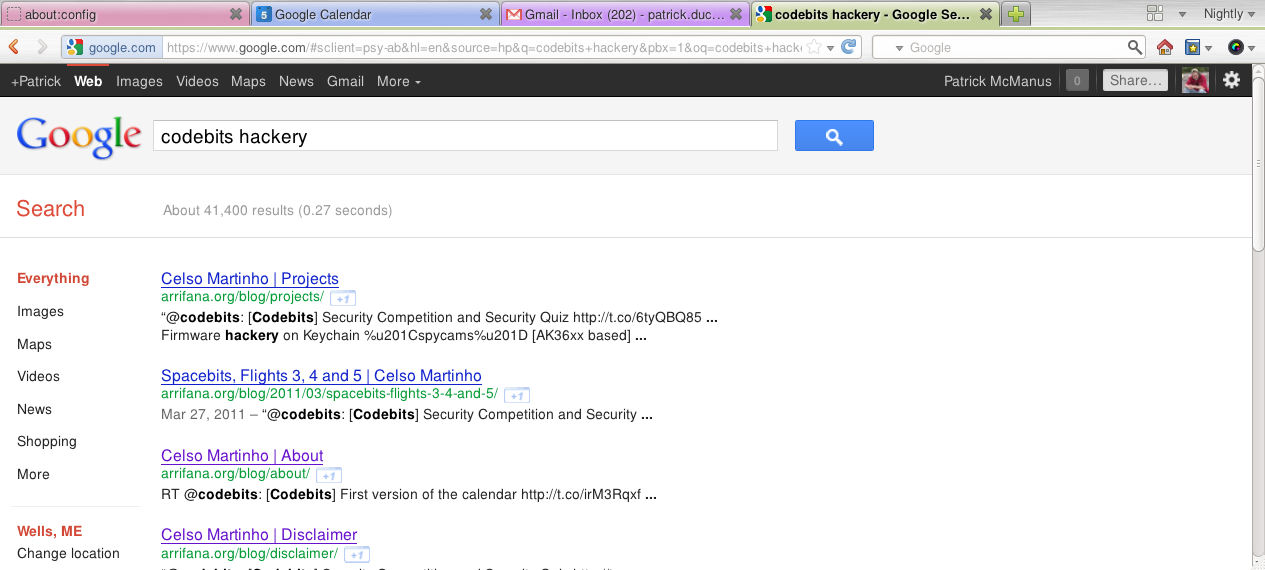

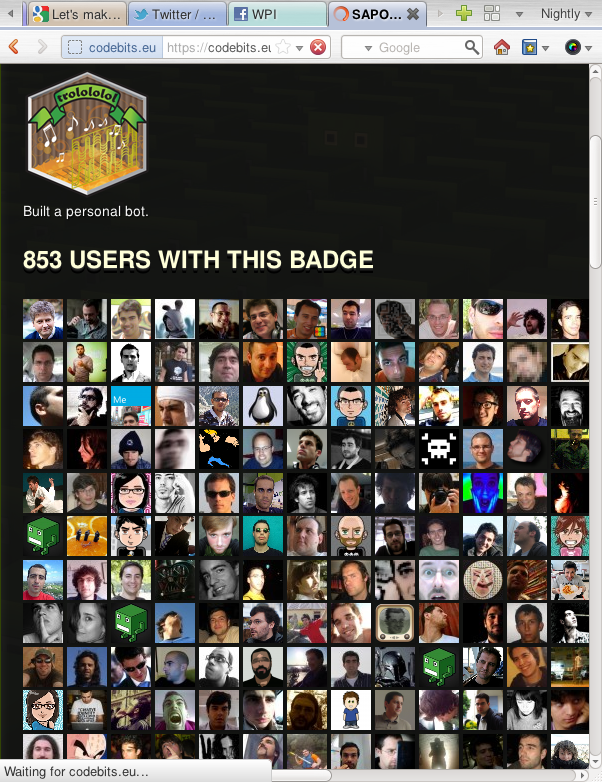

This is more prominent among the more highly optimized sites. It is not an automated behavior. For example - codebits has pages full of dozens of tiny badges, but it does not shard and as a result incurs very long queuing delays loading pages.

The Internet tail is very long and HTTP does not serve it well without a lot of hand tuning. But at least for the big sites sharding is a big deal.

A typical highly optimized highly sharded application. Do others like it come to mind?

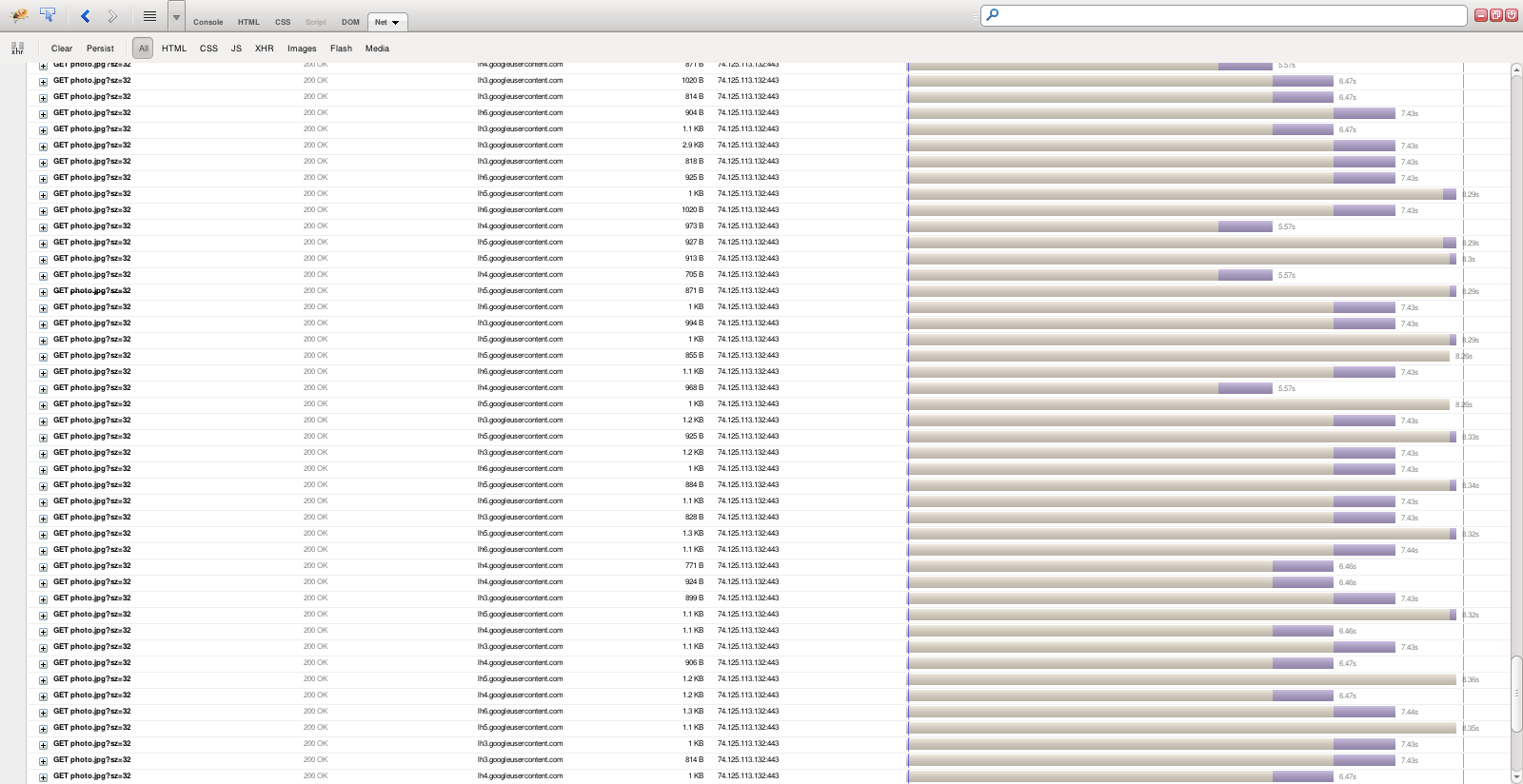

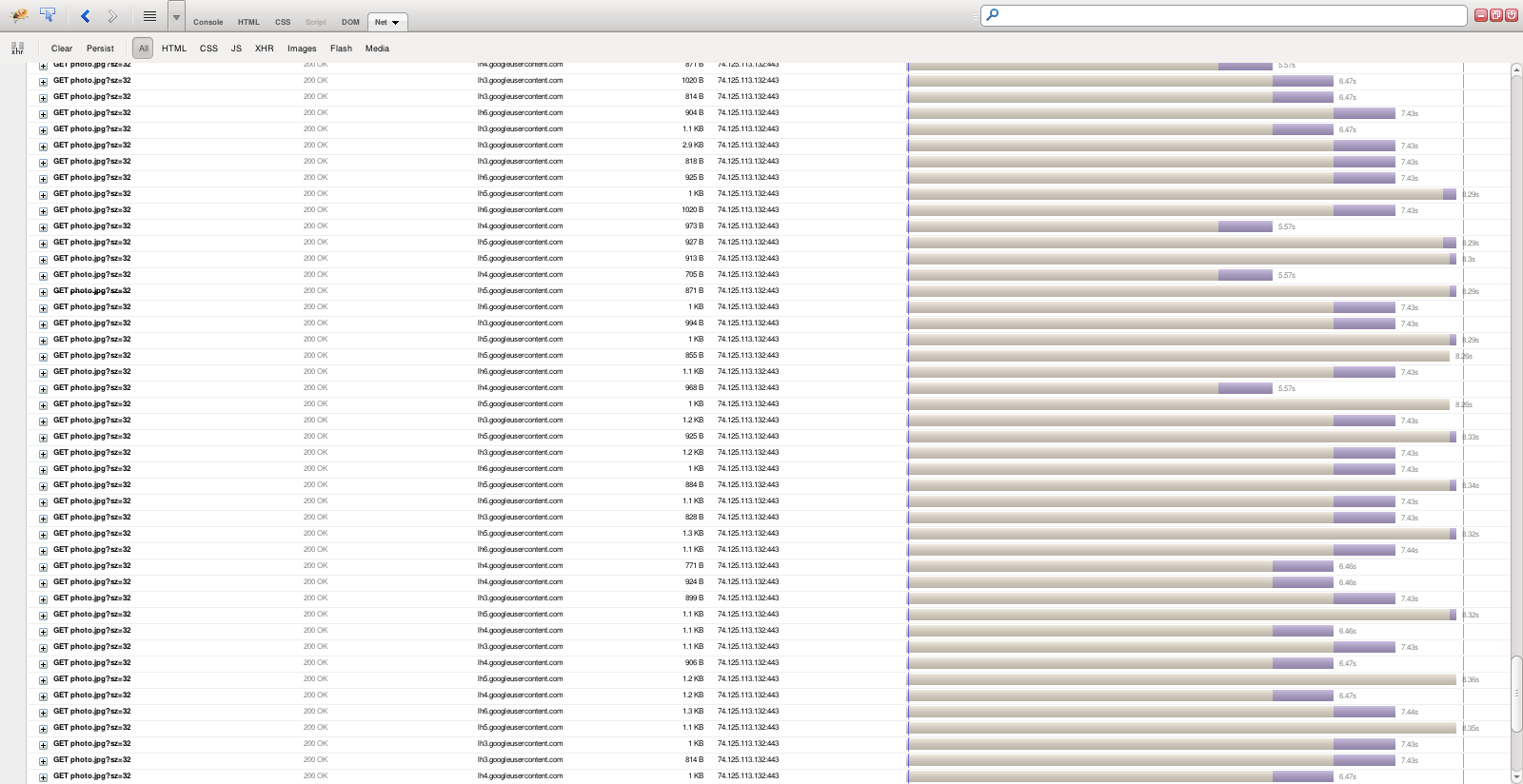

Even using broadband and over 2 dozen sharded connections there are still long queue times for embedded objects. The rest of the Internet is far worse beyond the best funded sites - as latency gets higher and the optimizations are not as advanced.

Sharding helps HTTP, but the end result is still poor.

Even if it performed fast enough there are very important reasons to not incentivize connection proliferation for the health and growth of the Internet.

The problems with adding more connections are:

- TCP connections have high latency to set up

- Every unique hostname requires a DNS lookup and a RTT

- Control packets require more processing than data packets

- Being mistaken for a SYN flood really ruins your day

- NATs, firewalls, and DPI limit connection counts in opaque and mysterious ways

- Connection state matters

- The impact of large numbers of parallel connections on TCP congestion control performance can cause complications for other applications

I want to focus on that last one for a minute.

This conservative period where the sender is growing into the amount of bandwidth actually available is known as slow start and it dominates most web transfers because individual web resources are too small to see it through to steady state. RTT becomes the dominant factor in transfer time.

But you knew that.

This is a primary driver behind HTTP concurrent connections - the slow start window is cheated by the same amount of concurrency. The result is more aggressive sending.
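
To put rough numbers on that, here is a minimal sketch of idealized slow start. It assumes no loss, a window that doubles every round trip, an initial window of 3 segments, and a 1460 byte MSS; those values and both function names are illustrative assumptions, not measurements from this talk.

```python
def round_trips(resource_bytes, init_cwnd_segments=3, mss=1460):
    """Round trips needed to deliver a resource under idealized slow start.

    Assumes no loss, a congestion window that doubles every RTT, and a
    receive window that never limits the sender.
    """
    segments_left = -(-resource_bytes // mss)  # ceiling division
    cwnd = init_cwnd_segments
    rtts = 0
    while segments_left > 0:
        segments_left -= cwnd  # send one full window this round trip
        cwnd *= 2              # slow start: double the window each RTT
        rtts += 1
    return rtts


def first_rtt_burst(connections, init_cwnd_segments=3, mss=1460):
    """Bytes that can be sent in the first RTT before any path probing."""
    return connections * init_cwnd_segments * mss


if __name__ == "__main__":
    print(round_trips(30_000))   # a small web resource
    print(first_rtt_burst(1))    # one connection
    print(first_rtt_burst(6))    # six parallel connections
```

Under these assumptions a 30,000 byte resource takes 3 round trips no matter how fast the link is, and six parallel connections can put 26,280 bytes in flight before any of them has probed the path, versus 4,380 for one.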

That is reckless.

If this non-probed sending rate is too great then chaos ensues.

This is a real problem for HTTP - but it isn't widely understood because of the massive amount of buffering done on the Internet.

That buffering leads to queueing delays in the network instead of at the client. In the network they affect everyone.

These long queues are awful for real time applications trying to share the link. If you are having a phone call, video chat, or trying to play a real time game all of your data needs to traverse (and wait in) that same queue. QoS could help here, but there is realistically no QoS on the Internet.

Jim Gettys calls this bufferbloat - you've probably read about it - and HTTP parallelism is a significant contributor.
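
The delay a full buffer adds is simple arithmetic: bytes queued divided by the rate the link drains them. A small sketch, where the buffer size and link speed are made-up illustrative numbers rather than measurements:

```python
def queue_delay_ms(queued_bytes, link_bits_per_second):
    """Time in milliseconds for a new packet to drain through a FIFO queue
    that already holds `queued_bytes` on a link of the given speed."""
    return queued_bytes * 8 / link_bits_per_second * 1000


if __name__ == "__main__":
    # Hypothetical example: a 256 KB buffer that is full, on a 1 Mbit/s link.
    print(queue_delay_ms(256 * 1024, 1_000_000))
```

With those assumed numbers every packet behind the queue, including the voice or game packet, waits about 2.1 seconds.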

Removing buffering isn't really an option for traditional HTTP flows because they are so small.

Reducing the number of connections would be a good first step.

Other than SPDY, Websockets deserves special mention.

It is not general purpose, but it is low latency, low overhead, and bi-directional.

Other approaches at a level parallel to TCP (these have to deal with additional operational burdens of integrating into the OS and IP stack):

- SCTP

- DCCP

- uTP (BitTorrent)

- A web transport protocol developed by Google.

- First published November 2009.

- There is an open protocol definition but standards body work is nascent

- Full support on the client side in Chrome is available

- SPDY is deployed on most Google servers

- Firefox is expected to have experimental support in Firefox 11

- Amazon Fire tablet reportedly will use SPDY as part of the client side of its split architecture

- A couple of commercial CDNs and application accelerators do SPDY to HTTP gateways

- Quality general server side support is less common but is a high priority.

- HTTP compatible.

- One TCP session instead of parallel HTTP connections via stream IDs.

- Client can create a stream on a live connection without a RTT.

- Streams multiplex with small (<4KB) chunks to solve Head of Line problems.

- Streams can be prioritized

- SPDY automatically de-shards.

- All connections are made with SSL. Securing the web, finally.

- HTTP headers are compressed with a specialized scheme.

- All SPDY implementations are gzip encoding capable

- Binary framing for efficiency and prevention of smuggling attacks

- Aggregated connection is more TCP friendly than HTTP

- Error codes to help identify non-processed requests
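
The stream ID, chunking, and priority bullets above can be sketched as a toy scheduler. This is not the SPDY wire format or any implementation's scheduler; the chunk size, the "lower value is served first" rule, and the `multiplex` name are assumptions made for illustration.

```python
from collections import deque

CHUNK = 2048  # illustrative payload size, kept under 4 KB


def multiplex(streams, chunk=CHUNK):
    """Yield (stream_id, payload) frames for one shared connection.

    `streams` maps stream_id -> (priority, body_bytes). In this sketch a
    lower priority value is served first, and streams that share a
    priority are interleaved round-robin, one chunk at a time.
    """
    by_priority = {}
    for stream_id, (priority, body) in streams.items():
        by_priority.setdefault(priority, deque()).append((stream_id, body))

    for priority in sorted(by_priority):
        queue = by_priority[priority]
        while queue:
            stream_id, body = queue.popleft()
            yield stream_id, body[:chunk]
            rest = body[chunk:]
            if rest:
                # Go to the back of the line so peers get a turn.
                queue.append((stream_id, rest))


if __name__ == "__main__":
    demo = {
        1: (1, b"a" * 5000),  # large image
        3: (0, b"c" * 100),   # small stylesheet, most urgent
        5: (1, b"b" * 3000),  # another image
    }
    for stream_id, payload in multiplex(demo):
        print(stream_id, len(payload))
```

The small urgent stream goes out first, and neither large body can hold the connection for more than one chunk at a time.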

Elephant flows interact better with TCP congestion control because:

- They are long enough to establish a legitimate probed congestion window state

- TCP losses are more likely to trigger fast retransmit in longer flows

- A congestion event is more likely to address the correct flow.

HTTP queues - reviewed.

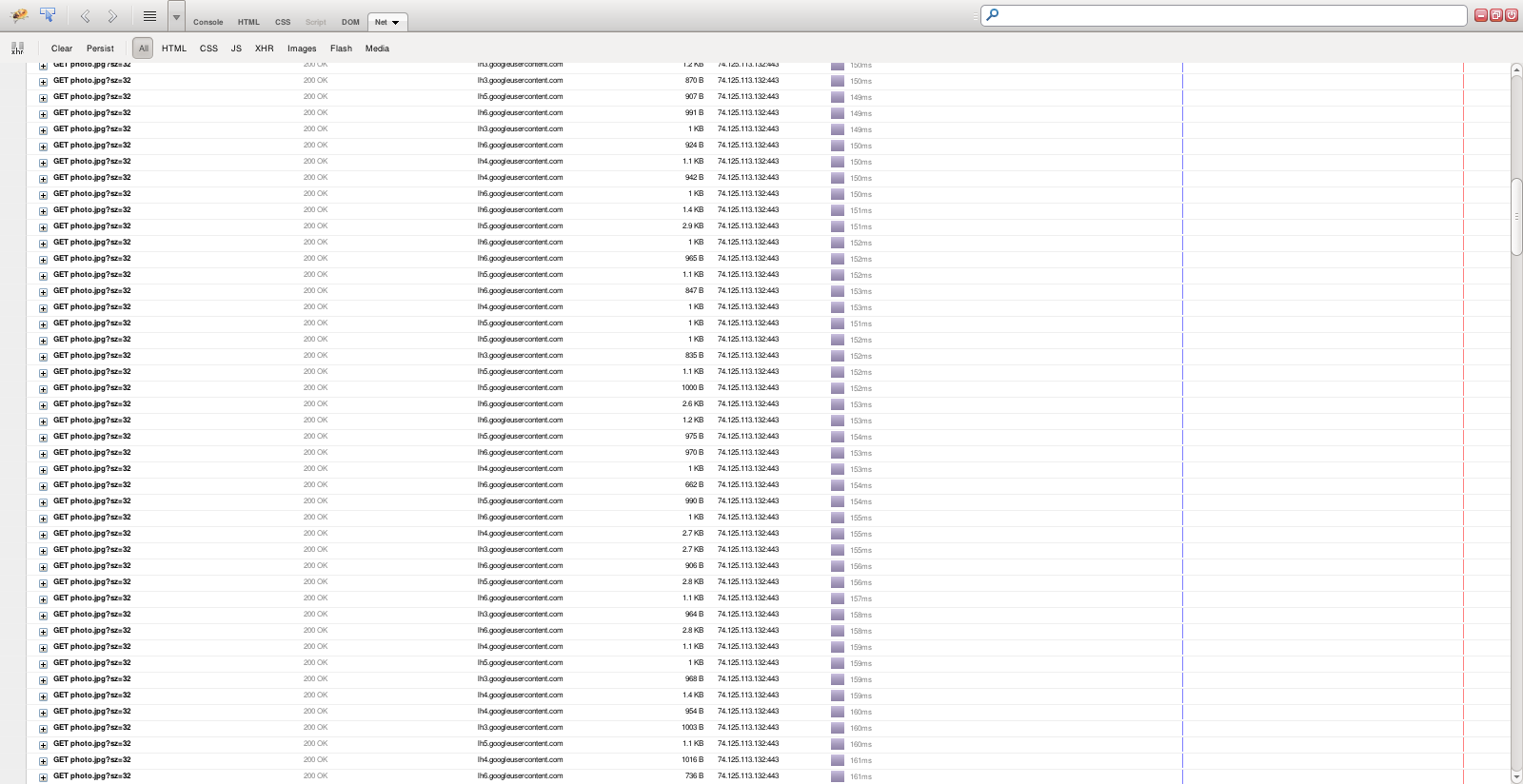

The payoff. Same website as the previous waterfall diagram.

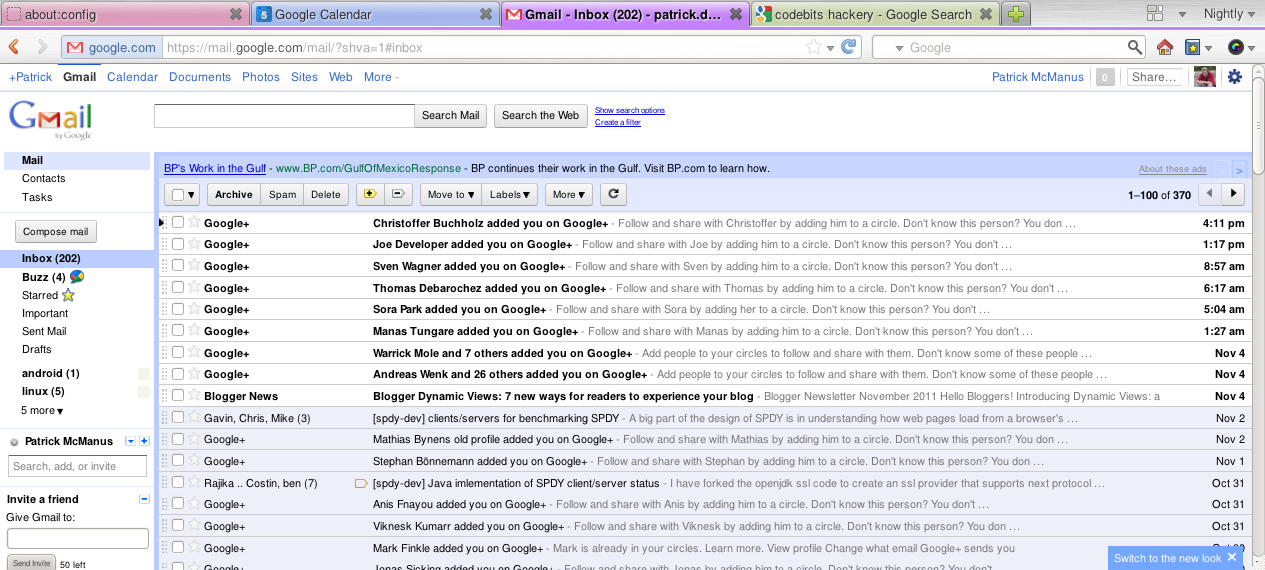

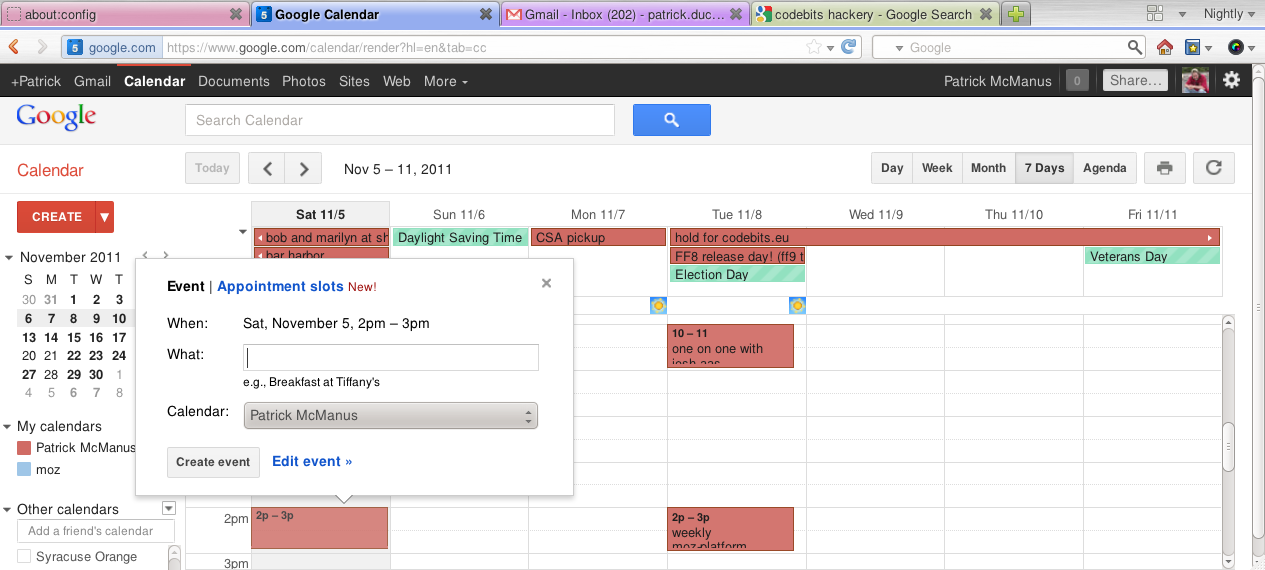

You probably use SPDY today if you are running Chrome and connecting via SSL to most of the Google services such as Gmail, Google Calendar, Google Plus, or search.

Sites that report information to Google Analytics over SSL use SPDY too.

Google just announced that logged in users accessing search will be redirected to https. That will transparently mean SPDY for SPDY enabled browsers.

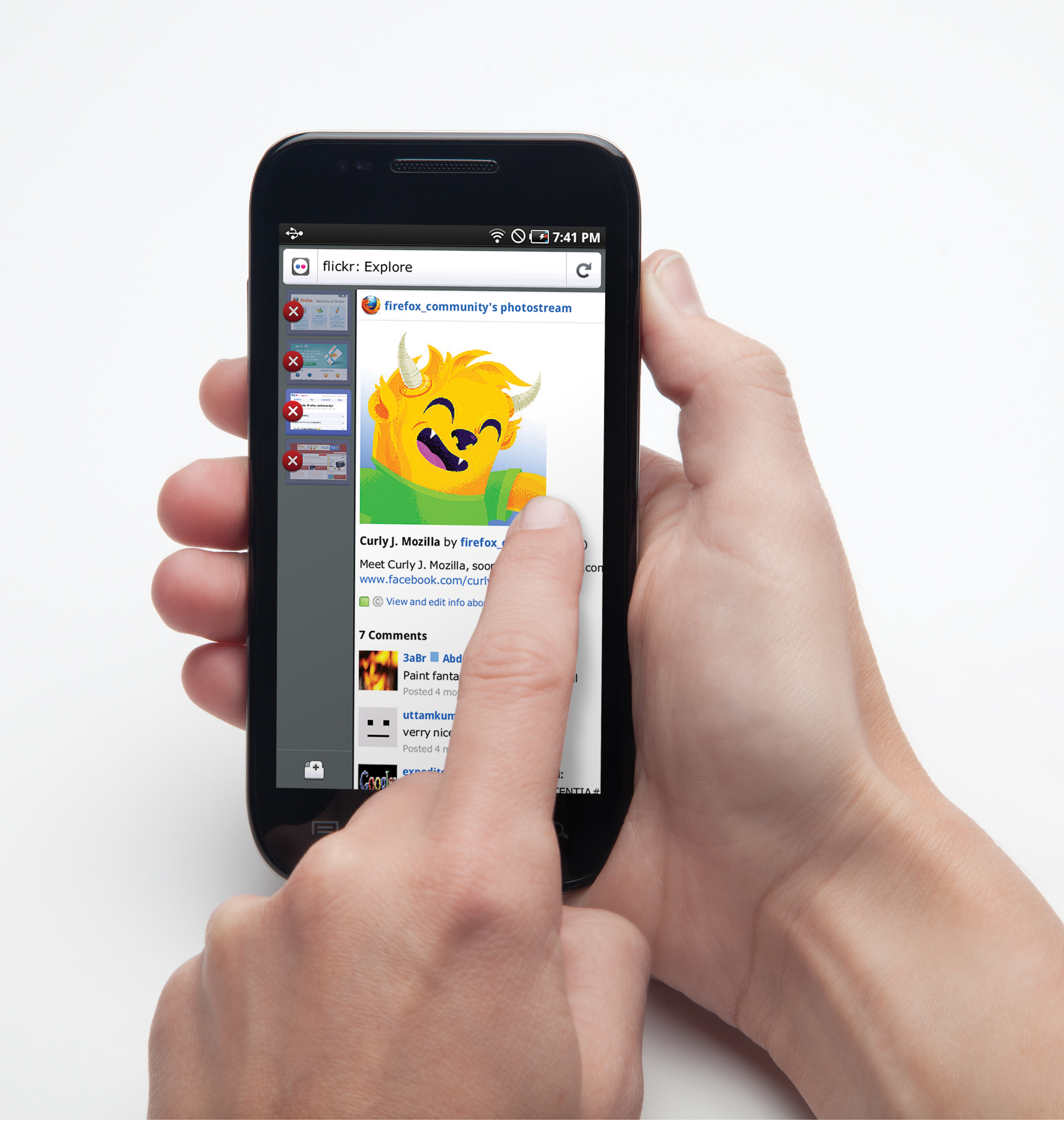

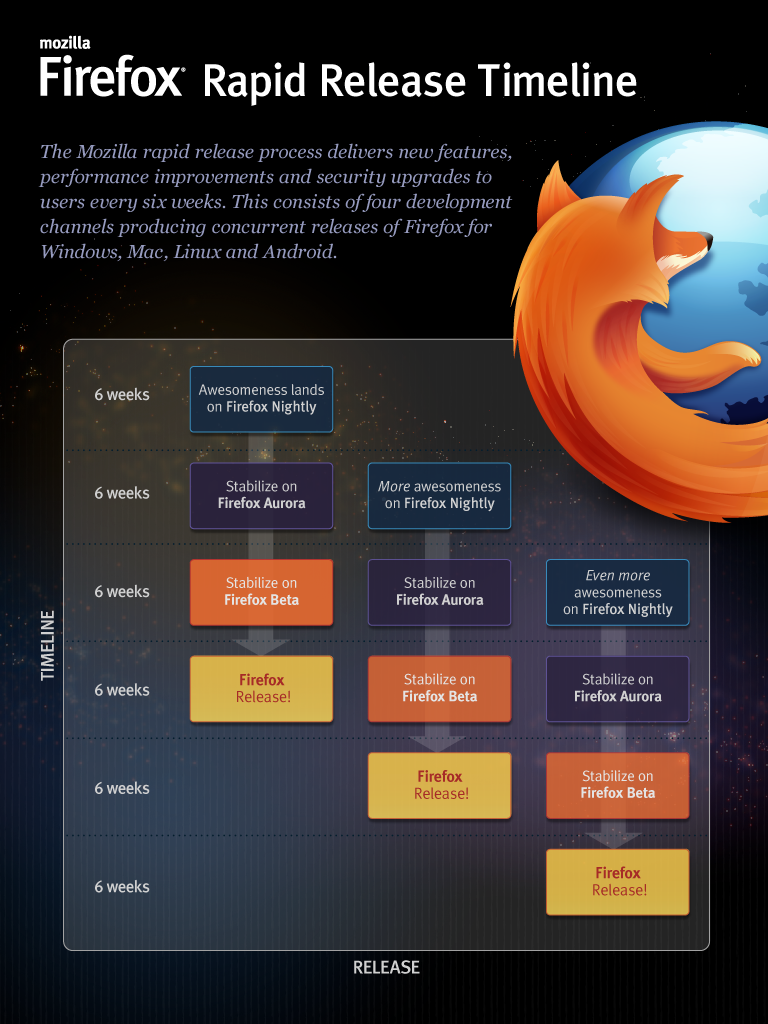

I expect SPDY will be part of Firefox 11. It may or may not be on by default in that version - too soon to tell. The hope is that it will show up (requiring a preference tweak to enable) in the Firefox Nightly builds the week of November 14th.

That same schedule applies to mobile Firefox. And thanks to our rapid release cycle we can iterate rapidly and get it out to users faster.

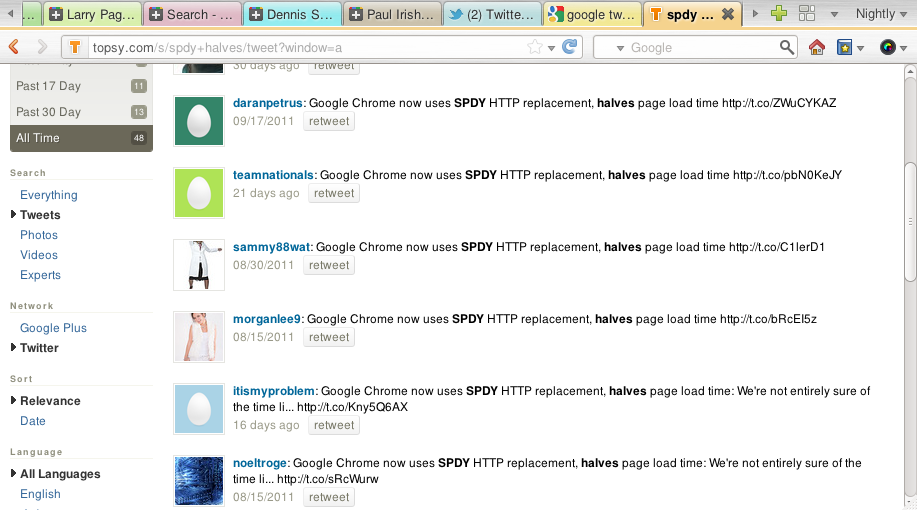

I've heard on the interweb that pages load faster with SPDY. Is it true?

Typical Case: A site with a more typical number of sub-resources that is not highly sharded. In my experience a 5 to 15 percent improvement in page load time is normal once all the connections have been established.

Worst Case: Compared to an extremely highly sharded web resource over low latency broadband, the benefits of SPDY for page load times are fairly minimal. Your primary wins come from header compression at that point.

This is consistent with Google's server side comparison data shared at last spring's Velocity conference.

Even if you see modest improvements in page load times remember you still have better queue management, more responsive interfaces due to cancellation operations, and fewer connections to manage both on the client and server.

Also don't forget the first click - If HTTPS requires 36 parallel SSL connections that is a very CPU intensive operation that SPDY can accomplish with 1 connection. Lower power processors especially appreciate that.

No new URLs or other markup changes are necessary (https:// will work for both spdy and non-spdy enabled clients).

Upgrading from plain-text HTTP involves the Alternate-Protocol response header.

This double dependency is a bit of a problem for SPDY adoption.

Two Reasons SPDY is always over SSL

- Forcing function for security.

- Clear path in SSL tunnel on a well connected port.

SPDY does a very good job compressing headers. It does this by

- Seeding an out of band compression dictionary with well known header names

- Normalizing the case of header names

- Never passing bodies through the compression stream
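
Those three steps can be sketched with Python's `zlib`, which accepts a preset dictionary through its `zdict` argument. The seed dictionary and the NUL-separated serialization here are hypothetical stand-ins; the real protocol defines its own dictionary and header block layout.

```python
import zlib

# Hypothetical seed dictionary agreed on out of band by both ends.
SEED = (b"acceptaccept-encodingaccept-languagecookiehostuser-agent"
        b"gzip,deflatetext/htmlHTTP/1.1")


def encode_headers(compressor, headers):
    """Serialize headers with lower-cased names and compress them.

    Only headers go through this compressor; bodies never do.
    Z_SYNC_FLUSH keeps the stream open so later requests on the same
    connection can reuse what earlier ones already sent.
    """
    block = b"".join(
        name.lower().encode() + b"\0" + value.encode() + b"\0"
        for name, value in sorted(headers.items())
    )
    return compressor.compress(block) + compressor.flush(zlib.Z_SYNC_FLUSH)


def decode_headers(decompressor, data):
    """Inverse of encode_headers for the same long-lived stream."""
    parts = decompressor.decompress(data).split(b"\0")[:-1]
    return {parts[i].decode(): parts[i + 1].decode()
            for i in range(0, len(parts), 2)}


if __name__ == "__main__":
    comp = zlib.compressobj(zdict=SEED)
    decomp = zlib.decompressobj(zdict=SEED)
    request = {
        "Host": "www.example.com",
        "User-Agent": "ExampleBrowser/1.0 (illustrative)",
        "Accept-Encoding": "gzip,deflate",
        "Cookie": "session=0123456789abcdef",
    }
    first = encode_headers(comp, request)
    second = encode_headers(comp, request)  # same headers, next request
    print(len(first), len(second))
    print(decode_headers(decomp, first))
```

Because the compression state lives as long as the connection, a repeated header set on the second request shrinks to little more than a back-reference.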

The results are impressive.

- 77% of headers are reduced 90% or more in size

- 94% of headers are reduced at least 80% in size

- The average header is reduced from 638 bytes to 59 bytes - about 91%. That's over 1KB per transaction and there are 43 HTTP transactions per average page!

Due to header compression, Google sees total download bytes reduced by 4% and upload bytes by 51%.

Google's server side data (again, from Velocity conf) shows the connections per page dropping by a factor of 4. This manifests itself in increased parallelism.

Generally pages with lots of tiny resources are going to create the most parallelism (and benefit the most from it). Can you think of any sites not yet using SPDY that would benefit from that?

Some head of line blocking impacts:

- Large responses block the transfer of small important ones such as stylesheets

- Resources with large think times (e.g. database lookups) block transfer of quickly accessed resources

- Requests cannot be canceled without creating a new TCP connection
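
The first two impacts can be illustrated with a toy model: each response has a time at which the server has it ready and a time it takes to transfer, and a pipelined connection must send them in request order. The timings and function names are invented for illustration, and a real multiplexed connection interleaves chunks rather than simply reordering whole responses.

```python
def waits(responses):
    """Per-response delay between 'ready' and 'first byte sent'.

    `responses` is a list of (name, ready_ms, transfer_ms) tuples in the
    order they will be sent on one connection.
    """
    result = {}
    link_free_at = 0
    for name, ready_ms, transfer_ms in responses:
        start = max(ready_ms, link_free_at)
        result[name] = start - ready_ms
        link_free_at = start + transfer_ms
    return result


def pipelined_waits(responses):
    """HTTP pipelining: responses must go out in request order."""
    return waits(responses)


def ready_order_waits(responses):
    """Idealized alternative: send whichever response is ready first."""
    return waits(sorted(responses, key=lambda r: r[1]))


if __name__ == "__main__":
    requested = [
        ("report.json", 200, 10),  # slow database lookup, requested first
        ("style.css", 5, 10),
        ("logo.png", 5, 20),
    ]
    print(pipelined_waits(requested))
    print(ready_order_waits(requested))
```

In the pipelined case the stylesheet sits ready for 205 ms behind the slow response; sent in ready order it does not wait at all.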

The log sample below shows such severe reordering of responses over SPDY that the same sequence over HTTP would have incurred 225ms of induced head of line blocking latency. 36 other response headers were processed during that interval.

- Not yet standardized

- End to End SSL makes for a poor fit for hierarchical caching.

- Debugging this is challenging

- Where are the server implementations?

The IETF is the logical forum - Google has always been receptive to that. This was discussed as part of the IETF HTTP Working Group in Prague last April - and the answer from Google was the same as it always has been. To paraphrase: "We'll do it when there is enough demand and offers of help so that it really is a community effort". There isn't much point in writing a community standard when only one participant has working experience on the topic.

We're working on this together. Come talk to me if you're interested, or keep your eyes on the usual locations.

- Per Stream Flow Control

- Server Push

There are also security considerations to be thought deeply about concerning:

- IP Pooling (used to de-shard)

- the Alternate-Protocol HTTP header

There are some frameworks that support it (node-spdy, spdy_server.rb, web-page-replay) reasonably well, but there is not a general purpose web server of high quality. There is a mod-spdy for Apache but it does not multiplex at all, which is probably the most important attribute of the protocol.

Server development is no doubt held back by the NPN dependency in SSL and the lack of its standard inclusion in security libraries. Patches do exist for OpenSSL, and as part of the Firefox and Chrome work it will be merged into the standard NSS distribution very soon.

Server support is a major priority for me, and when the Google SPDY team announced their fourth quarter goals they cited it as well. If anyone in the room would like to help let's talk and do it together.